2010-01-18

Layers: Cross-Platform Acceleration

So, it's been a while since I've given everyone here an update, and for that I do apologize. First of all, Direct2D support is coming along well, and we're working hard to land it on trunk. I've been getting swift reviews on all my code from a lot of different people, which is great, but it will still take some time since the code is complex and touches a lot of the Mozilla tree. Once it lands it will be disabled by default, but people will be able to easily enable it in their Firefox nightly builds! Of course you can follow the status of this work by keeping track of bug 527707. It's not perfect yet, but once again, thanks to all who've given me the great feedback that got this to a point where it's quite usable!

Layers

So, now to the actual title of this post. Layers. Layers are another API we are designing for Mozilla, which can be used to hardware accelerate certain parts of website rendering. Normally this is where I would start a long rant on why hardware acceleration is such a good thing, but since I've already done that 2 posts ago, I'll just refer you there.

First of all it is important to point out that Layers is by no means a replacement for Direct2D. Direct2D accelerates all rendering, from fonts to paths and everything else, whereas layers are intended to allow accelerated rendering and blending of surfaces. A layer could be accessible as many different types of cairo surfaces, possibly D2D, but also any other. What this means is that the layer system is designed to be easily implemented on top of different APIs: Direct3D (9 or 10), but also OpenGL. We therefore hope it will provide a performance increase for users on Mac and Linux as well.

So what are these layers?

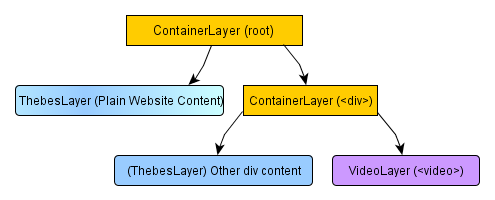

Essentially, they're just that, layers. Normally a website will be rendered directly, as a whole, to a surface (say a window in most cases). This single surface means that unless the surface itself accelerates certain operations internally, it is hard to apply any hardware acceleration there. The layer system starts out with a LayerManager. This LayerManager is responsible for managing the layers in a certain part of screen real estate (say a window). Rather than providing a surface, the LayerManager provides layers to someone who wants to draw, and the API user (in this case our layout system) can then structure those in an ordered tree. By rendering specific parts of a website (for example transformed or transparent areas) into their own layers, the LayerManager can use hardware acceleration to composite those areas together efficiently. There can be several types of layers, but two really form the core of the system: ThebesLayers and ContainerLayers.

ContainerLayers

So, container layers also live up to their name. They are a type of layer which may contain other layers, their children. These types of layers basically form the backbone of our tree. They do not themselves contain any graphical data, but they have children which are rendered into their area. It can for example be used to transform a set of children in a single transformation.

ThebesLayers

Now, these sound a bit more exotic. Thebes is our graphics library which wraps cairo. A thebes surface is a surface which can be drawn to using a thebes context. ThebesLayers are layers which are accessible as a thebes surface, and can therefore easily be used to render content to. This could for example also be a Direct2D surface. ThebesLayers form the leaves of our tree: they contain content directly, and do not have any children. However, like any other layer, they may have transformations or blending effects applied to them.

Other types of layers

Why would we have other types of layers, you might ask? Well, there are actually several reasons. One of the layers we will have is a video layer. The reason is that video data is generally stored not in the normal RGB color space, but rather as YUV data (luminance and chrominance information, not necessarily at the same resolution). As with any pixel operation, our graphics hardware is especially good at converting this to the RGB type of pixels we need on the screen, so this layer can be used specifically for video data. Another layer we will have is a hardware layer. This type of layer contains a surface which exists, and is accessible, solely on the hardware. These would be useful for example for WebGL, where we currently have to do an expensive readback from the graphics hardware to get a frame back into software. Using a hardware layer, we could then blend that WebGL content directly on the graphics hardware, skipping the intermediate copy to normal memory.
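To make the YUV-to-RGB step a bit more concrete, here is a minimal sketch in Python of what the conversion does per pixel. It assumes full-range BT.601 coefficients (the JPEG-style variant); real video may use a different matrix or range, and the function names are purely illustrative, not part of any Mozilla API. The second function shows the "not necessarily at the same resolution" part, assuming chroma planes at half resolution in both directions.

```python
def yuv_to_rgb(y, u, v):
    """Convert one full-range BT.601 YUV pixel (0-255 per channel) to RGB."""
    # The chroma channels are stored with an offset of 128.
    cb = u - 128
    cr = v - 128
    r = y + 1.402 * cr
    g = y - 0.344136 * cb - 0.714136 * cr
    b = y + 1.772 * cb
    # Round and clamp each channel to the valid 8-bit range.
    return tuple(max(0, min(255, int(round(c)))) for c in (r, g, b))


def convert_420_frame(y_plane, u_plane, v_plane):
    """Convert a frame whose chroma planes are half resolution in both axes.

    Every 2x2 block of luma samples shares a single U and a single V sample.
    """
    return [
        [
            yuv_to_rgb(y, u_plane[row // 2][col // 2], v_plane[row // 2][col // 2])
            for col, y in enumerate(line)
        ]
        for row, line in enumerate(y_plane)
    ]
```

On the GPU the same arithmetic would run in a pixel shader for every pixel in parallel, which is why handing the raw YUV planes to the hardware is attractive.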

So, now that we know that, a layer tree for a website with a div in it, in which there's a video and some other content, may look like this:

Simple example(actual results may differ)
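The tree for a div containing a video and some other content can also be sketched in a few lines of Python. This is only an illustration of the structure: the class names mirror the layer types from this post, but the fields, the `flatten` helper and the example values are all made up, and the real API is C++ and looks different. A plain offset stands in for a full transform, and a container's opacity is simply multiplied into its children, which is a simplification of real group blending.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Layer:
    name: str
    offset: Tuple[int, int] = (0, 0)  # stand-in for a full transform
    opacity: float = 1.0


@dataclass
class ContainerLayer(Layer):
    # Containers hold no graphical data themselves, only children.
    children: List[Layer] = field(default_factory=list)


@dataclass
class ThebesLayer(Layer):
    """Leaf layer holding normally rendered content."""


@dataclass
class VideoLayer(Layer):
    """Leaf layer holding video frames."""


def flatten(layer, parent_offset=(0, 0), parent_opacity=1.0):
    """Return the leaf layers in paint order with accumulated offset and opacity."""
    x = parent_offset[0] + layer.offset[0]
    y = parent_offset[1] + layer.offset[1]
    opacity = parent_opacity * layer.opacity
    if isinstance(layer, ContainerLayer):
        leaves = []
        for child in layer.children:
            leaves.extend(flatten(child, (x, y), opacity))
        return leaves
    return [(layer.name, (x, y), opacity)]


# Hypothetical page: a half-transparent, offset div with content and a video.
page = ContainerLayer("page", children=[
    ThebesLayer("page content"),
    ContainerLayer("div", offset=(100, 50), opacity=0.5, children=[
        ThebesLayer("div content"),
        VideoLayer("video", offset=(10, 10)),
    ]),
])

for leaf in flatten(page):
    print(leaf)
```

Moving or fading the div only changes one value on its container; none of the leaf contents need to be re-rendered, which is the part the hardware can then do cheaply.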

Other advantages

There is a long list of other advantages we hope to achieve with this system. One of them is that the rendering of the layer tree could occur off the main thread. This means that if we integrate animations into the system, the main thread could be busy doing something else (for example processing JavaScript) while the animations proceed smoothly. Another advantage is that it offers us a system to retain parts of our rendered websites, including, for example, parts which aren't in the currently viewed area. This could bring advantages especially where the CPU speed is low (for example on mobile hardware), but we still want smooth scrolling and zooming.

Conclusions

Well, that explains the very basics of the layers system we're working on. Robert O'Callahan has already been doing all the hard work of writing the very first API for layers, as well as a preliminary integration into our layout system! See bug 534425 for our progress there. Additionally, I've been working on OpenGL 2.1 and D3D10 implementations of the API so far. I had a lot of feedback from people who were disappointed we were not offering something like Direct2D on other platforms. We've not given up on bringing something like that to other platforms. Layers should however, with less risk and work, already bring a significant amount of hardware acceleration to other platforms! I hope you enjoyed reading this, that I've informed you a little more about our latest work in this area, and of course that I've reassured you that we're doing everything we can to bring performance improvements to as many users as possible.

2009-11-25

Firefox and Direct2D: Performance Analysis

So, it seems my little demo of a pre-alpha Firefox build with Direct2D support has generated quite some attention! This is good, in many ways. Already users trying out the builds have helped us fix many bugs in it. So I'm already raking in some of the benefits. It has also, understandably, led to a lot of people running their own tests, some more useful than others, some perhaps wrong, or inaccurate. In any case, first of all I wanted to discuss a little how to analyze D2D performance with a simple Firefox build.

Use the same build

Don't compare a random nightly to this build in performance. The nightly builds contain updates, so no two nightly builds will be the same, and therefore they will not perform the same either. I don't continuously keep my D2D builds up with the latest repository head. Additionally the build flags used may very well not be the same. For example, some builds (like the nightlies) may be built with something called Profile Guided Optimization, which means the compiler analyzes hotspots and optimizes them. This significantly improves their JavaScript performance. My test build is not built with PGO, although I might release a build using PGO as it gets more stable. This is probably causing some of the differences some people testing have been seeing. Please keep in mind that because this is not a final, complete build, it should probably not be compared too enthusiastically to other browsers either.

If you want to properly compare performance, use my build, and switch on the forcegdi pref. Go to 'about:config' and look for the font.engine.forcegdi pref, and set that to true. After that, you will have a build using GDI only.

Obviously I should have mentioned this in my previous post, so people would not have wasted their time on inaccurate performance analysis. I apologize for that, it is partially because I had not expected so much publicity.

Focus on what (should be) different

When you do run tests, it is of course always valuable to get measurements on the overall performance of the browser. If some part of the browser other than rendering shows performance decreases, I'm doing something wrong and please do let me know! There is however a lot more involved in parsing and displaying a webpage than just the rendering. If you want to get a really good idea of what it does to your rendering speed, you'll want to measure solely the time it takes to do the actual drawing. My build includes a Mozilla-specific (not cross-browser) tool that will allow you to do this. You can add a 'bookmarklet' that runs this test: just add a bookmark, and make it point to 'javascript:var r = window.QueryInterface(Components.interfaces.nsIInterfaceRequestor).getInterface(Components.interfaces.nsIDOMWindowUtils).redraw(500); alert(r + " ms");' where the number inside 'redraw' is the number of rendering runs you want to time. It will then pop up an alert box which will tell you the time in milliseconds it took to execute the redraws. Keep in mind this still only analyzes static rendering performance.

Know what you're measuring, and how you're doing it

If you are using an independent measurement benchmark, be sure you understand exactly what it measures, and how it does it. This is a very important step. Something spewing out a number and then listing 'higher is better' for a certain part of functionality is great, but it only becomes useful information when you know how the measurement is executed, what its margin of error is, and what overhead it adds to whatever it is testing. For a lot of all-round browser benchmarks, rendering is only a small part of what's tested, and the total test result differences between D2D enabled and disabled may not reflect the actual difference in user experience.
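As a rough illustration of what knowing your margin of error can look like in practice, here is a small Python sketch that summarizes repeated timing runs and checks whether two configurations differ by more than their combined noise. The function names, the factor of two standard errors, and all the timings are made up for the example; this is a rule of thumb, not a rigorous statistical test.

```python
import statistics


def summarize(samples_ms):
    """Return (mean, standard error of the mean) for repeated timing runs."""
    mean = statistics.mean(samples_ms)
    stdev = statistics.stdev(samples_ms)  # sample standard deviation
    sem = stdev / len(samples_ms) ** 0.5
    return mean, sem


def clearly_different(runs_a, runs_b, k=2.0):
    """True if the means differ by more than k combined standard errors."""
    mean_a, sem_a = summarize(runs_a)
    mean_b, sem_b = summarize(runs_b)
    combined = (sem_a ** 2 + sem_b ** 2) ** 0.5
    return abs(mean_a - mean_b) > k * combined


# Made-up timings in milliseconds, purely for illustration.
gdi_runs = [1000, 1020, 980, 1010, 990]
d2d_runs = [700, 720, 690, 710, 680]
print(summarize(gdi_runs), summarize(d2d_runs))
print(clearly_different(gdi_runs, d2d_runs))
```

Running each configuration several times and looking at the spread, rather than a single number, already avoids most of the misleading comparisons.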

Considerations on static page-loading measurements

A rendering improvement is practically useless if it doesn't actually make things better for the end user, so page-load timings do matter. Keep in mind, though, that when you're timing how long it takes to load a page, you're timing all the aforementioned overhead as well. When interacting with a page without switching to another page, a lot of this overhead does not occur, and a large part of the time might be spent in actual rendering. This means that during dynamic interaction (like scrolling), improvements may be more noticeable, although harder to measure.
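The effect of that overhead can be put into a little arithmetic. The Python sketch below applies the standard Amdahl's law formula: if only a fraction of the total time is rendering, speeding up rendering speeds up the whole by much less. The fractions and the 2x rendering speedup are hypothetical numbers chosen for illustration, not measurements.

```python
def overall_speedup(render_fraction, render_speedup):
    """Amdahl's law: total speedup when only the rendering part gets faster.

    render_fraction: share of total time spent rendering (0.0 to 1.0).
    render_speedup: how many times faster rendering alone becomes.
    """
    remaining = (1.0 - render_fraction) + render_fraction / render_speedup
    return 1.0 / remaining


# Hypothetical page load: 20% of the time is rendering, rendering gets 2x faster.
print(round(overall_speedup(0.2, 2.0), 3))  # about 1.111
# Hypothetical scrolling: 80% of the time is rendering, same 2x improvement.
print(round(overall_speedup(0.8, 2.0), 3))  # about 1.667
```

With the same rendering improvement, the page-load number barely moves while the interactive case improves a lot, which is why a load-time benchmark can understate what the user feels while scrolling.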

And finally, thanks!

It's been great to see how many people are trying this, and as I mentioned earlier in this post, it has already greatly assisted us. It's also great that people are working on their own performance tests, since it is always a good thing to have independent performance tests run. This too will help us improve the build in areas where our own testing may have been lacking. So, thanks to everyone who is directly or indirectly helping to hopefully provide a great new feature!

2009-11-22

Direct2D: Hardware Rendering a Browser

A short while ago I wrote about my work on DirectWrite usage in Firefox. Next to DirectWrite, Microsoft also published another new API with Windows 7 (and the Vista Platform Update), called Direct2D. Direct2D is designed as a replacement for GDI and functions as a vector graphics rendering engine, using GPU acceleration to give large performance boosts to transformations and blending operations.

Why GPU acceleration?

First of all, why is GPU acceleration important? Well, in modern day computers, it's pretty common to have a relatively powerful GPU. Since the GPU can specialize in very specific operations (namely vertex transformations and pixel operations), it is much faster than the CPU for those specific operations. Where the fastest desktop CPUs clock in the hundreds of GFLOPS (billion floating point operations per second), the fastest GPUs clock in the TFLOPS (trillion floating point operations per second). Currently the GPU is mainly used in video games, and its usage in desktop rendering is limited. Direct2D signifies an important step towards a future where more and more desktop software will use the GPU where available to provide better quality and better performance rendering.

Direct2D usage in Firefox

A while ago I started my investigation into Direct2D usage in Firefox (see bug 527707). Since then we've made significant progress and are now able to present a Firefox browser completely rendered using Direct2D, making intensive usage of the GPU (this includes the UI, menu bars, etc.). I won't be showing any screenshots, since it is not supposed to look much different. But I will be sharing some technical details, first performance indications and a test build for those of you running Windows 7 or an updated version of Vista!

Implementation

Direct2D has been implemented as a Cairo backend, meaning our work can eventually be used to facilitate Direct2D usage by all Cairo based software. We use Direct3D textures as the backing store for all surfaces. This allows us to implement operations not supported by Direct2D using Direct3D, which avoids the need for software fallbacks, and software fallbacks would require readbacks. Since a readback forces the GPU to transfer memory to the CPU before the CPU can read it, readbacks carry significant performance penalties because of the GPU-CPU synchronization required. On Direct3D10+ hardware this approach should not negatively impact performance, but it does mean it is harder to implement an effective software fallback for D2D. In that scenario, though, we could continue using Cairo with GDI as our vector graphics rendering system.

Internally here's a rough mapping of cairo concepts to D2D concepts:

cairo_surface_t - ID2D1RenderTarget

cairo_pattern_t - ID2D1Brush

cairo clip path - ID2D1Layer with GeometryMask

cairo_path_t - ID2D1PathGeometry

cairo_stroke_style_t - ID2D1StrokeStyle

More about the implementation can be learned by looking at the patches included on the bug! Now to look at how well it works.

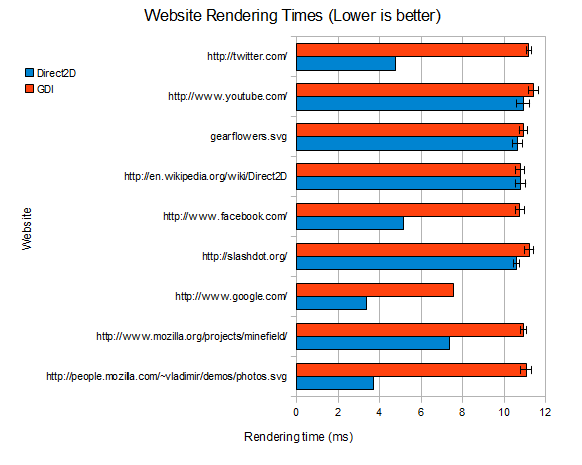

Website Benchmarks

First of all let's look at the page rendering times. I've graphed the rendering time for several common websites together with the error margin of my measurements. The test hardware was a Core i7 920 with a Radeon HD4850 graphics card:

There are some interesting conclusions to be drawn from this graph. First of all it can be seen that Direct2D, on this hardware, performs significantly better or similarly on all tested websites. What can also be seen is that on complexly structured websites the performance advantages are significantly smaller, and the error margin in the measurements is larger (i.e. different rendering runs of the same site deviated more strongly). I am still unsure of the exact reasons for this. One reason could be that these websites also contain significant amounts of text or complex polygons. In scenarios with few transformations and blending operations, the GPU will show smaller advantages over the CPU. Additionally the CPU will be spending more time processing the actual items to be displayed, which might decrease the significance of the actual drawing operations somewhat.

Other Performance Considerations

Although static website rendering is an interesting benchmark, there are other, at least as important, performance considerations. As websites become more graphically intense, dynamic graphics will start playing a larger role, especially in user interfaces. If we look at some interesting sites using fancy opacity and transformation effects (take for example photos.svg), we can see that D2D provides a much better experience on the test system. Where GDI quickly drops in framerate to a jittery experience when sizing up photos, Direct2D remains completely smooth.

Another interesting consideration is scrolling. Since on scrolling only small parts of the website need to be re-drawn, it has the potential of creating a much smoother scrolling experience when using Direct2D. This is also the feedback we've received from people utilizing the test builds.

Conclusions

Although the investigation and implementation are still in an early stage, we can conclude that things are looking very promising for Direct2D. Though older PCs with pre-D3D10 graphics cards and WDDM 1.0 drivers will not show significant improvements, going into the future most PCs will support DirectX 10+. This could allow extremely smooth graphical experiences for web content like SVG or transformed CSS. Interestingly, Microsoft has also only recently announced that IE9 will feature Direct2D support. Feel free to download and try a build of Firefox with Direct2D support here. There are several known issues and in some cases some rendering artifacts may appear. In general it should be quite usable on D3D10 graphics cards. It may or may not work on D3D9 graphics cards, depending on the exact graphics card specifications.

Well, that's it for now, I hope I've given you an interesting first glance into the future of desktop graphics.

NOTE: If you want to do your own performance analysis, please see my other blogpost about the subject here

NOTE2: For those not used to running experimental builds: if you just execute this .exe while your normal Firefox is running, you will get another window of your normal Firefox. You need to either close your normal Firefox, or run the build from the command line on a different profile, using: 'firefox -no-remote -P d2d'.

2009-10-27

Font Rendering: GDI versus DirectWrite

So, this is where I give my first blog post a shot. Let's see how it goes. So, I've been working for Mozilla on implementing DirectWrite as a Cairo and gfxFont backend, in order to investigate the differences, see bug 51642. DirectWrite is a new font rendering API that was released with Windows 7, and will be included in the Vista platform update for Windows Vista SP2. It offers a number of advantages over the old GDI font rendering system, which I will attempt to elaborate on. The main advantages that it has over GDI are:

- Sub-pixel shaping and rendering

- Bi-directional anti-aliasing. (GDI only supported horizontal anti-aliasing)

JDagget already has a nice post on CFF font rendering here. I'm hoping to give some additional information here.

First of all let's look at the W as found on the front page of the Mozilla Minefield start page:

| GDI | DirectWrite |

|  |

|  |

First of all it can be seen that the subpixel anti-aliasing is 'softer' in the DirectWrite version. Where in GDI the ClearType anti-aliasing introduces a fairly strong colorization (the left edge is clearly yellowish, the right side is clearly purple-ish), the DirectWrite version introduces a more natural color transition with the sub-pixel anti-aliasing.

Another difference can be seen in the serifs at the top of the W, where the advantage of vertical anti-aliasing is clearly visible. In the GDI version the serif is really mostly a block 2 pixels high, while the vertical anti-aliasing in the DirectWrite version introduces the actual curve expected in a serif.

Another big advantage of using DirectWrite can clearly be seen when we look at transformed texts. The following are different transformation situations, comparing the results between DirectWrite and GDI:

| GDI | DirectWrite |

|  |

|  |

|  |

In the first example it can clearly be seen that GDI has clear aliasing and placement issues when rendering transformed fonts which DirectWrite doesn't suffer from. A displacement in the line of the 'B' from button can clearly be seen. Also the lack of vertical anti-aliasing is clearly visible in the transformed setting where the main lines of the font are diagonally placed.

The second image again illustrates the improved subpixel anti-aliasing performance in DirectWrite. The DirectWrite case looks quite good, whereas in the GDI case the text is barely legible. The anti-aliasing issues GDI has here are clearly visible in the i in 'becoming' where it actually shows as a red letter because of inaccurate sub-pixel usage. Additionally several lines and curves of the letters are simply not visible in the GDI case.

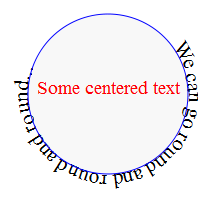

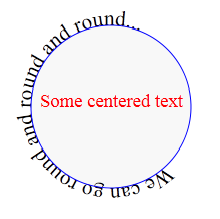

The last image in this series shows another interesting property. The most notable effect in this image is the pixel snapping GDI applies to the letters. It can clearly be seen that the letters are snapped to whole pixels on the circle. For untransformed text, where the baseline is completely aligned on pixels, this is not a big issue. In the case of the circular baseline, however, it means the 'O' of the second 'round' actually floats above the line, and the 'e' in the first 'We' floats above the baseline as well. In the DirectWrite version the benefit of subpixel positioning can clearly be seen, as the text is nicely aligned along the circle.

The advantages of subpixel positioning are not limited to transformed text though, below is an example of how subpixel positioning can improve the kerning of text.

| GDI | DirectWrite |

|  |

In the GDI example it is clear that the spacing between the E and the V is rather odd. You can see this effect if you go to the Mozilla website and slowly, pixel by pixel, decrease the horizontal size of the window. You can see the letters 'dance around' with respect to each other as each individual glyph's origin gets snapped to a whole pixel column. When using DirectWrite, subpixel placement means the glyphs are spaced identically at all window sizes.
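The 'dancing' can be reproduced with a few lines of arithmetic. The Python sketch below places glyphs along a line using fractional advances, once with each origin snapped to a whole pixel and once without. The advance of 7.4 pixels, the starting positions and the function names are made-up values for illustration, and the snapping rule is a simplification of what a real rasterizer does.

```python
import math


def glyph_origins(start_x, advances, snap_to_pixels):
    """Return the x origin of each glyph given per-glyph advances in pixels."""
    origins = []
    pen = start_x
    for advance in advances:
        # Snapping rounds each origin to the nearest whole pixel column.
        origins.append(math.floor(pen + 0.5) if snap_to_pixels else pen)
        pen += advance
    return origins


def gaps(origins):
    """Distance between consecutive glyph origins, rounded for readability."""
    return [round(b - a, 3) for a, b in zip(origins, origins[1:])]


advances = [7.4, 7.4, 7.4, 7.4]  # hypothetical ideal advance per glyph

for start in (0.0, 0.4):  # e.g. the text shifting slightly as the window resizes
    snapped = gaps(glyph_origins(start, advances, snap_to_pixels=True))
    subpixel = gaps(glyph_origins(start, advances, snap_to_pixels=False))
    print(start, snapped, subpixel)
```

With snapping, the gaps alternate between 7 and 8 pixels, and which gap gets the extra pixel changes as the starting position shifts. Without snapping, every gap stays at 7.4 pixels regardless of where the text starts.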

The final screenshots I'd like to show are a general look at the browser UI:

Now this is where DirectWrite arguably does not perform better. The DirectWrite UI clearly looks 'lighter' than the GDI UI. And although the curves and letters are smoother, at the font size and DPI of the screenshots it is likely very personal which one you feel is better. One advantage that DirectWrite does have is, again, the superior subpixel anti-aliasing. In the GDI example there's clear colorization at the horizontal boundaries of the lines. DirectWrite does not suffer from this, as a final image illustrates:

I hope I've given everybody reading this a bit of an idea of the quality differences between GDI and DirectWrite. The last point I'd like to mention briefly is performance. The current implementation primarily draws to a Direct2D surface created using CreateDCRenderTarget. This means that for every font operation, Direct2D will rebind the DC render target to a GDI surface. For a DCRenderTarget, Direct2D internally does as much hardware acceleration as possible, and then blits that surface to the software GDI surface when done. As a fallback it can draw to a DirectWrite GDI interop surface, but this codepath should usually not be used.

When using the Direct2D method, performance at the moment is slightly worse than in GDI. Interestingly enough, CPU usage does not saturate when continuously re-rendering fonts. This is most likely due to the font rendering blocking while it waits for hardware rendering operations to finish, which makes the rendering thread regularly relinquish some of its time slice as it starts waiting for the display hardware. Part of the decrease in performance can probably be attributed to the fact that DirectWrite simply does more work than GDI does. Should certain features be disabled, it will probably perform better. Additionally, a more clever way should be devised to manage the D2D surfaces. In theory each gfxWindowsSurface could have a D2D DCRenderTarget and keep hold of it, preventing the surface binding currently needed on every single font drawing operation.
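The idea of keeping hold of a render target per surface is essentially a cache. The Python sketch below only illustrates that pattern and counts how often the expensive step would happen. The classes, method names and the string surface ID are all hypothetical stand-ins; none of this is the Direct2D or gfx API.

```python
class FakeRenderTarget:
    """Stand-in for an expensive-to-bind render target (not a real D2D object)."""

    def __init__(self, surface_id):
        self.surface_id = surface_id


class RenderTargetCache:
    """Keeps one render target per surface so binding happens only once."""

    def __init__(self):
        self._targets = {}
        self.binds = 0  # counts how often the expensive step ran

    def target_for(self, surface_id):
        target = self._targets.get(surface_id)
        if target is None:
            # Only pay the binding cost the first time a surface is seen.
            self.binds += 1
            target = FakeRenderTarget(surface_id)
            self._targets[surface_id] = target
        return target

    def release(self, surface_id):
        """Drop the cached target when its surface goes away."""
        self._targets.pop(surface_id, None)


cache = RenderTargetCache()
for _ in range(100):  # 100 font drawing operations on the same surface
    cache.target_for("window-1")
print(cache.binds)
```

In this toy version a hundred draws cost a single bind instead of a hundred. A real implementation would also have to deal with surfaces being resized or destroyed, which is where the `release` hook would come in.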

Well, that's about it for this post. I hope my first attempt at this sort of post is an interesting read for everyone! I hope to be able to share more information in the near future. Oh, and before I forget! There's a try-server build that will use DirectWrite on Windows 7, feel free to try it on your favourite font stress-test!

2009-10-23

Starting a blog ...

So, it seems it's finally happened, I've decided to start a blog. Interestingly enough I've never really taken a lot of interest in things like blogging, microblogging, social networking or any other one of those popular internet activities. I don't have a Facebook account, and I've probably seen the Twitter website about 3 or 4 times in my entire life. To be honest, if a year or so ago anyone had asked me what the 10 things were I'd be least likely to do, starting a blog would probably have ranked somewhere high up in that list. So the question is, what brought about that amazing turnaround?

Well, interestingly enough, people who know me personally know I have strong opinions on nearly everything conceivable. From politics to social interaction, I'm always up for a good discussion, and usually I'll be quite convinced I'm right as well. So, in theory that would mean I'd be quite inclined to start a blog, after all, no better way to vent your opinion to a large group of people! The problem is, opinions change, and the internet has a tremendous memory, I'm really not so sure I want the world to remember my current opinions and thoughts 20 years from now.

This brings me to one of the reasons I'm not into a lot of these social internet activities. Normal social interaction, odd behavior, stupid actions, etcetera, exist at one point in time. You can get completely wasted and behave like a total idiot, but unless you do something stupid enough to hit the newspapers, the only record of this will be in the minds of people. You can then later say things like 'it wasn't that bad' or 'that's not how it happened', and no one would ever be able to fundamentally prove what you really did. The internet is a completely different world! Pretty much anything you do, no matter how silly, impulsive or stupid, will be recorded and distributed to countless data storage devices. Yes, you better be real careful with what you do out there!

The second reason is much simpler. I'm not actually that interested in what other people do in their social lives. That is not to say I don't care about other people, but if they want to share their social life and possibly hear my opinion, I'm sure they'll tell me about what they did last week face-to-face. One of the few Twitter messages I ever read was from a friend and contained the following information: 'drinking a good glass of red wine while cooking'. Although of course that information is very interesting, considering the limited amount of time one has in life, there really is more interesting information out there.

Finally I don't think people are that interested in what I do. My life isn't, and has never been that interesting, I do a lot of fun things, but I don't see how anyone else would benefit by reading about them.

So, the question asked remains, why a blog then? Well, the first point stands, but I suppose I'll just have to make sure I don't post too radical thoughts, and try not to post anything too stupid. Although this is a hard task for me, I think I'll manage that. As to the second point, I've noticed that although I'm still not interested in specifically what wine someone is drinking, or the tricks they can make their dog do, there's actually a fair number of people posting much more informative and interesting things on their blogs. That actually makes it an interesting way to keep up to date with the latest technical innovations people are working on.

This brings us to the last point, and with that the reason I myself decided to start this blog. I recently started working as a contractor for the Mozilla Corporation. Very quickly after I started I got asked 'Let me know if you do a blog post, I'd love to see some screenshots...' And I concluded that I was doing things people would like to read about, evaluate and give useful feedback on.

And there's the reason, I hope that this way I can publish about technical innovations that I'm working on. Hopefully that way I can make use of the collective knowledge of people reading about my work and get feedback on where I could improve the things I'm doing or what alternative directions should be explored. Why this long rant then about why I'm starting a blog? Well, I guess I just wanted to justify things so as not to look a fool to all those people I've always told I'd NEVER start a blog. :)